3D Computer Vision From Point Clouds to URDF Generation

Embed rich media such as videos and LaTeX math

Embed rich media such as videos and LaTeX mathOverview

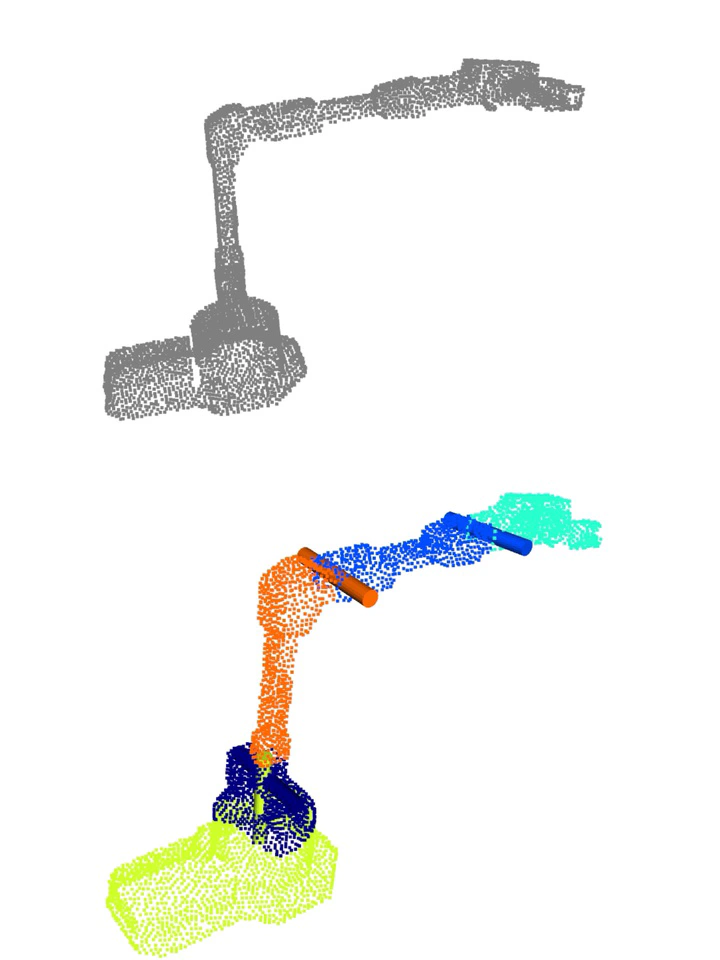

An experimental platform is designed to study the robotic arm’s motion range and camera field of view, using the camera to capture point cloud data of the robotic arm.

Each frame of point cloud data is segmented using the k-means algorithm, dividing it into multiple segments.

A multi-layer perceptron (MLP) model is then trained on these segments, using Chamfer distance as the loss function to compute the transformation matrix for each segment. A distance matrix is calculated to determine the relative distance between pairs of points, aiding in coordinate partitioning during the clustering process.

Before generating the URDF file, we classify the robotic arm’s links into two cases: known and unknown degrees of freedom (DOF). For known DOF, links are directly divided; for unknown DOF, we use the silhouette score method to search for the optimal DOF within a specified range. This method increments the DOF value, clustering at each step and calculating silhouette scores to select the DOF with the best clustering quality.

Using the NetworkX library, a graph structure is created to represent the connections between links. The minimum spanning tree (MST) method is employed to establish the primary link connections, resulting in an acyclic, connected kinematic tree. Based on the segmentation results, a URDF file and corresponding kinematic tree are generated, facilitating the modeling and visualization of the robotic arm’s structure.

In this project, I was primarily responsible for the design and setup of the experimental platform, data collection, partial process optimization, and implementation of some code.(Submitted to CVPR 2025 arXiv:2412.05507.)